Hardware H.264 decoding on the Milk-V Duo S, with output on an LCD screen

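I. Overview

After getting a Duo S I wanted to dig deeper into Linux and try hardware H.264 decoding. After a bit more than a week of on-and-off experimenting, I ended up with a simple demo that decodes H.264 and shows the result on an LCD screen.

The full code is in section IV. I also forked the SDK and pushed my changes to my own GitHub repository, cvitek-tdl-sdk-sg200x, which will also make it easier for me to study TDL later.

Demo video; likes are appreciated.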

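II. Preparation

1. Set up the cross-compilation toolchain

I build on an RK3588, so I had to download a separate toolchain for an ARM host. Reference tutorial:

"M1-ARM64-Debian11/UOS-v20 编译工具链已准备好, 伙计们开干吧!" (roughly: "The M1-ARM64-Debian11/UOS-v20 toolchain is ready, let's get to work!").

2. Add the LCD driver to the kernel and build the image

My LCD uses an ILI9488 controller. Reference tutorial:

"Milk-V Duo tinydrm驱动屏幕(ili9488/st7789)" (driving an ili9488/st7789 screen on the Milk-V Duo with tinydrm).

Screens differ in resolution, so the code that refreshes the image on the LCD has to be adjusted to match your panel.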
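To make the resolution dependency concrete, here is a minimal sketch of copying a decoded frame into the centre of a framebuffer. It assumes a packed RGB888 source with red first (for example 384x288) and an RGB565 little-endian framebuffer (for example 480x320); those sizes and formats appear later in this article, but your panel and driver may differ. The function names are hypothetical and are not part of the SDK or of my demo code.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Pack one 8-bit-per-channel pixel into RGB565 (5 bits R, 6 bits G, 5 bits B). */
static uint16_t rgb888_to_rgb565(uint8_t r, uint8_t g, uint8_t b)
{
    return (uint16_t)(((r & 0xF8u) << 8) | ((g & 0xFCu) << 3) | (b >> 3));
}

/*
 * Copy a packed RGB888 image (src_w x src_h, src_stride bytes per row) into the
 * centre of an RGB565 framebuffer (fb_w x fb_h, fb_stride_px pixels per row).
 * The source must fit inside the framebuffer.
 */
static void blit_rgb888_centered(const uint8_t *src, int src_w, int src_h, size_t src_stride,
                                 uint16_t *fb, int fb_w, int fb_h, size_t fb_stride_px)
{
    assert(src_w <= fb_w && src_h <= fb_h);
    int x0 = (fb_w - src_w) / 2;   /* left margin in pixels */
    int y0 = (fb_h - src_h) / 2;   /* top margin in rows */
    for (int y = 0; y < src_h; y++) {
        const uint8_t *s = src + (size_t)y * src_stride;
        uint16_t *d = fb + (size_t)(y + y0) * fb_stride_px + x0;
        for (int x = 0; x < src_w; x++, s += 3)
            d[x] = rgb888_to_rgb565(s[0], s[1], s[2]);
    }
}
```

With a different panel only the fb_w, fb_h and fb_stride_px arguments change; a source larger than the panel would need scaling first, which is what VPSS is used for later on.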

3. Build the ffmpeg shared libraries

Clone the ffmpeg repository:

git clone https://git.ffmpeg.org/ffmpeg.git

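Use configure to generate the Makefile. I was afraid of forgetting the parameters, so I wrote a small script for it.

CC and CXX are the toolchain paths and PREFIX is the install path. I install the shared libraries on the RK3588 first so they are easy to link against later.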

#!/bin/bash
CC=/home/lyy/milkv-sdk/duo-examples/duo-sdk/riscv64-linux-musl-arm64/bin/riscv64-unknown-linux-musl-gcc
CXX=/home/lyy/milkv-sdk/duo-examples/duo-sdk/riscv64-linux-musl-arm64/bin/riscv64-unknown-linux-musl-g++
PREFIX=/home/lyy/milkv-sdk/duo-examples/ffmpeg/output/
export PKG_CONFIG_PATH=/home/lyy/milkv-sdk/duo-examples/x264/prefix/lib/pkgconfig
./configure --cc=${CC} --cxx=${CXX} --prefix=${PREFIX} --extra-ldflags=-L/home/lyy/milkv-sdk/duo-examples/x264/prefix/lib \
--enable-cross-compile --disable-asm --enable-parsers --disable-decoders --enable-decoder=h264 --enable-libx264 --enable-gpl --enable-decoder=aac \
--disable-debug --enable-ffmpeg --enable-shared --disable-static --disable-stripping --disable-doc

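I added the x264 encoder library here, which has to be built separately. It is optional; without it the configure command becomes: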

./configure --cc=${CC} --cxx=${CXX} --prefix=${PREFIX} \
--enable-cross-compile --disable-asm --enable-parsers --disable-decoders --enable-decoder=h264 --enable-gpl --enable-decoder=aac \
--disable-debug --enable-ffmpeg --enable-shared --disable-static --disable-stripping --disable-doc

After configuring, build and install into the directory specified above.

make && make install

4. Download the Cvitek MMF SDK and modify the Makefile

git clone https://github.com/milkv-duo/cvitek-tdl-sdk-sg200x.git

I put my code in ./cvitek-tdl-sdk-sg200x/sample/cvi_tdl, so that is the Makefile to modify.

cd ./cvitek-tdl-sdk-sg200x/sample/cvi_tdl && vi Makefile

I named my source files sample_my_*.c and added the corresponding entries by copying the sample_vi_* ones.

The ffmpeg header path also has to be appended to CFLAGS.

CFLAGS += -I$(SDK_INC_PATH) \

-I$(SDK_TDL_INC_PATH) \

-I$(SDK_APP_INC_PATH) \

-I$(SDK_SAMPLE_INC_PATH) \

-I$(SDK_SAMPLE_UTILS_PATH) \

-I$(RTSP_INC_PATH) \

-I$(IVE_SDK_INC_PATH) \

-I$(OPENCV_INC_PATH) \

-I$(STB_INC_PATH) \

-I$(MW_SAMPLE_PATH) \

-I$(MW_ISP_INC_PATH) \

-I$(MW_PANEL_INC_PATH) \

-I$(MW_PANEL_BOARD_INC_PATH) \

-I$(MW_LINUX_INC_PATH) \

-I$(MW_INC_PATH) \

-I$(MW_INC_PATH)/linux \

-I$(AISDK_ROOT_PATH)/include/stb \

-I/home/lyy/milkv-sdk/duo-examples/ffmpeg/output/include #ffmpeg

#the path after -I is the absolute include path of the installed ffmpeg

……

TARGETS_APP_SAMPLE := $(shell find . -type f -name 'sample_app_*.c' -exec basename {} .c ';')

TARGETS_YOLO_SAMPLE := $(shell find . -type f -name 'sample_yolo*.cpp' -exec basename {} .cpp ';')

TARGETS_MY_SAMPLE := $(shell find . -type f -name 'sample_my_*.c' -exec basename {} .c ';')

#modeled on the entries above

TARGETS = $(TARGETS_SAMPLE_INIT) \

$(TARGETS_VI_SAMPLE) \

$(TARGETS_AUDIO_SAMPLE) \

$(TARGETS_READ_SAMPLE) \

$(TARGETS_APP_SAMPLE) \

$(TARGETS_YOLO_SAMPLE) \

$(TARGETS_MY_SAMPLE)

……

sample_vi_%: $(PWD)/sample_vi_%.o \

$(SDK_ROOT_PATH)/sample/utils/vi_vo_utils.o \

$(SDK_ROOT_PATH)/sample/utils/sample_utils.o \

$(SDK_ROOT_PATH)/sample/utils/middleware_utils.o \

$(SAMPLE_COMMON_FILE)

$(CC) $(CFLAGS) -g $(SAMPLE_APP_LIBS) -o $@ $^

sample_my_%: $(PWD)/sample_my_%.o \

$(SDK_ROOT_PATH)/sample/utils/vi_vo_utils.o \

$(SDK_ROOT_PATH)/sample/utils/sample_utils.o \

$(SDK_ROOT_PATH)/sample/utils/middleware_utils.o \

$(SAMPLE_COMMON_FILE)

$(CC) $(CFLAGS) $(SAMPLE_APP_LIBS) -g -o $@ $^ -L/home/lyy/milkv-sdk/duo-examples/ffmpeg/output/lib -lswscale -lx264 -lpostproc -lswresample -lavfilter -lavcodec -lavformat -lavutil -lavdevice

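#the path after -L is the shared library directory; -lx264 can be dropped

Then add the toolchain's bin directory to PATH and run the compile_sample.sh script to check that everything builds.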

cd ../

PATH=$PATH:/home/lyy/milkv-sdk/duo-examples/duo-sdk/riscv64-linux-musl-arm64/bin

./compile_sample.sh

III. Code walkthrough

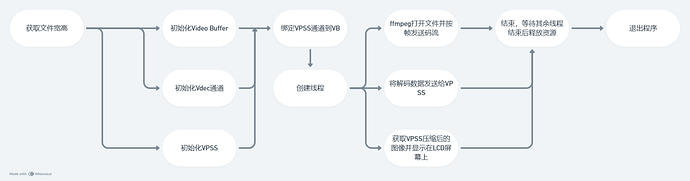

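1. Basic flow

This demo uses VDEC channel 0, and VPSS Grp 0 with output channels 0 and 1.

Why send the frames decoded by VDEC to VPSS by hand? I found that binding VDEC to VPSS had no effect, but I still need VPSS to scale the frames, so the only option was to fetch each decoded frame and send it to VPSS myself.

2. Read the width and height of the H.264 file with ffmpeg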

_UNUSED static SIZE_S getVideoWH(char *filePath){

SIZE_S size = {

.u32Height = 0,

.u32Width = 0

};

AVCodecParameters *origin_par = NULL;

AVFormatContext *fmt_ctx = NULL;

int result, video_stream;

result = avformat_open_input(&fmt_ctx, filePath, NULL, NULL);

if (result < 0) {

av_log(NULL, AV_LOG_ERROR, "Can't open file\n");

goto get_video_info_err;

}

result = avformat_find_stream_info(fmt_ctx, NULL);

if (result < 0) {

av_log(NULL, AV_LOG_ERROR, "Can't get stream info\n");

goto get_video_info_err;

}

video_stream = av_find_best_stream(fmt_ctx, AVMEDIA_TYPE_VIDEO, -1, -1, NULL, 0);

if (video_stream < 0) {

av_log(NULL, AV_LOG_ERROR, "Can't find video stream in input file\n");

goto get_video_info_err;

}

origin_par = fmt_ctx->streams[video_stream]->codecpar;

size.u32Height = origin_par->height;

size.u32Width = origin_par->width;

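get_video_info_err:

//Shared exit path: avformat_close_input() does nothing when fmt_ctx is NULL,

//so the context is also released when an error occurs after a successful open.

avformat_close_input(&fmt_ctx);

return size;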

}

SIZE_S srcSize = getVideoWH(argv[1]);

if(srcSize.u32Width == 0 || srcSize.u32Height == 0) return 0;

SIZE_S dstSize = { //VPSS

.u32Width = 384,

.u32Height = 288

};

3. Initialize the video buffer (VB) pools

static CVI_S32 VBPool_Init(SIZE_S chn0Size, SIZE_S chn1Size){

CVI_S32 s32Ret;

CVI_U32 u32BlkSize;

VB_CONFIG_S stVbConf;

memset( &stVbConf, 0, sizeof(VB_CONFIG_S));

stVbConf.u32MaxPoolCnt = 3;

u32BlkSize = COMMON_GetPicBufferSize(chn0Size.u32Width, chn0Size.u32Height, VDEC_PIXEL_FORMAT, DATA_BITWIDTH_8,

COMPRESS_MODE_NONE, DEFAULT_ALIGN);

stVbConf.astCommPool[0].u32BlkSize = u32BlkSize;

stVbConf.astCommPool[0].u32BlkCnt = 3;

u32BlkSize = COMMON_GetPicBufferSize(chn1Size.u32Width, chn1Size.u32Height, PIXEL_FORMAT_RGB_888, DATA_BITWIDTH_8,

COMPRESS_MODE_NONE, DEFAULT_ALIGN);

stVbConf.astCommPool[1].u32BlkSize = u32BlkSize;

stVbConf.astCommPool[1].u32BlkCnt = 3;

attachVdecVBPool(&stVbConf.astCommPool[2]);

s32Ret = SAMPLE_COMM_SYS_Init(&stVbConf);

if (s32Ret != CVI_SUCCESS){

printf("system init failed with %#x!\n", s32Ret);

return CVI_FAILURE;

}else{

printf("system init success!\n");

}

return CVI_SUCCESS;

}
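As a rough feel for the block sizes involved, the sketch below computes the raw size of an NV21 and a packed RGB888 picture with an aligned row stride. This is not the SDK's COMMON_GetPicBufferSize, which may add further padding; the helper names and the 64-byte alignment used in the example are assumptions for illustration only.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Round v up to a multiple of align (align must be a power of two). */
static uint32_t align_up(uint32_t v, uint32_t align)
{
    assert(align != 0 && (align & (align - 1)) == 0);
    return (v + align - 1) & ~(align - 1);
}

/* NV21: a full-resolution Y plane plus one interleaved VU plane at half height. */
static size_t nv21_size(uint32_t w, uint32_t h, uint32_t stride_align)
{
    size_t stride = align_up(w, stride_align);
    return stride * h + stride * ((h + 1) / 2);
}

/* Packed RGB888: three bytes per pixel, one plane. */
static size_t rgb888_size(uint32_t w, uint32_t h, uint32_t stride_align)
{
    size_t stride = align_up(w * 3, stride_align);
    return stride * h;
}
```

For a 1920x1080 source, nv21_size(1920, 1080, 64) gives 3110400 bytes per block, and the 384x288 RGB888 output needs 331776 bytes, so three blocks of each stay well within a few tens of megabytes.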

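4. Configure the VDEC channel

pstChnAttr->enMode must be set to VIDEO_MODE_FRAME, so the stream is sent one frame at a time.

The official CV180x/CV181x Media Software Development Guide says that only VIDEO_MODE_FRAME is currently supported, yet the SAMPLE_COMM_VDEC_StartSendStream function in sample_common_vdec.c failed to send frames correctly: in my tests the picture was corrupted. That is why the frame-sending thread shown later uses ffmpeg to fetch the data of each frame.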

#define VDEC_STREAM_MODE VIDEO_MODE_FRAME

#define VDEC_EN_TYPE PT_H264

#define VDEC_PIXEL_FORMAT PIXEL_FORMAT_NV21

static CVI_S32 setVdecChnAttr(VDEC_CHN_ATTR_S *pstChnAttr,VDEC_CHN VdecChn,SIZE_S srcSize){

VDEC_CHN_PARAM_S stChnParam;

pstChnAttr->enType = VDEC_EN_TYPE ;

pstChnAttr->enMode = VDEC_STREAM_MODE ;

pstChnAttr->u32PicHeight = srcSize.u32Height ;

pstChnAttr->u32PicWidth = srcSize.u32Width ;

pstChnAttr->u32StreamBufSize = ALIGN(pstChnAttr->u32PicHeight * pstChnAttr->u32PicWidth, 0x4000);

CVI_VDEC_MEM("u32StreamBufSize = 0x%X\n", pstChnAttr->u32StreamBufSize);

pstChnAttr->u32FrameBufCnt = 3 ;//reference frames + display frames + 1

CVI_VDEC_TRACE("VdecChn = %d\n", VdecChn) ;

CHECK_CHN_RET(CVI_VDEC_CreateChn(VdecChn, pstChnAttr), VdecChn, "CVI_VDEC_CreateChn");

printf("CVI_VDEC_CreateChn success\n");

CHECK_CHN_RET(CVI_VDEC_GetChnParam(VdecChn, &stChnParam), VdecChn, "CVI_VDEC_GetChnParam");

printf("CVI_VDEC_GetChnParam success\n");

stChnParam.enPixelFormat = VDEC_PIXEL_FORMAT;//output pixel format of the VDEC channel

stChnParam.enType = VDEC_EN_TYPE ;

stChnParam.u32DisplayFrameNum = 1 ;

CHECK_CHN_RET(CVI_VDEC_SetChnParam(VdecChn, &stChnParam), VdecChn, "CVI_VDEC_SetChnParam");

printf("CVI_VDEC_SetChnParam success\n");

CHECK_CHN_RET(CVI_VDEC_StartRecvStream(VdecChn), VdecChn, "CVI_VDEC_StartRecvStream");

printf("CVI_VDEC_StartRecvStream success\n");

return CVI_SUCCESS;

}
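Frame mode means that each send call should carry one complete frame. In my demo ffmpeg provides those boundaries, but for a raw Annex B .h264 file they can also be seen by scanning for start codes. The following is only a minimal sketch of that scan with hypothetical helper names; it is not how the SDK sample or my demo splits the stream, and it does not group NAL units into frames.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/*
 * Find the next Annex B start code (00 00 01, optionally preceded by an extra 00)
 * at or after `from`. Returns the offset of the byte right after the start code
 * (the NAL header byte), or `len` if no further NAL unit is found.
 */
static size_t next_nal(const uint8_t *buf, size_t len, size_t from)
{
    assert(buf != NULL);
    for (size_t i = from; i + 3 <= len; i++) {
        if (buf[i] == 0 && buf[i + 1] == 0 && buf[i + 2] == 1)
            return i + 3;
    }
    return len;
}

/* In H.264, nal_unit_type is the low 5 bits of the NAL header byte. */
static int nal_type(uint8_t header)
{
    return header & 0x1F;
}

/* Count the NAL units of a given type in an Annex B buffer. */
static int count_nals_of_type(const uint8_t *buf, size_t len, int type)
{
    int n = 0;
    for (size_t pos = next_nal(buf, len, 0); pos < len; pos = next_nal(buf, len, pos)) {
        if (nal_type(buf[pos]) == type)
            n++;
    }
    return n;
}
```

Types 7, 8 and 5 are SPS, PPS and IDR slices respectively. A sender that cuts the file at arbitrary byte offsets instead of at such boundaries hands the decoder partial frames, which is one thing worth checking when the picture comes out corrupted.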

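5. Initialize the VPSS group

I bind VDEC to VPSS here, but the binding did not take effect.

The width, height and pixel format have to be set for both the group input and each output channel.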

static CVI_S32 setVpssGrp( VPSS_GRP VpssGrp, CVI_BOOL *abChnEnable, SIZE_S srcSize, SIZE_S dstSize){

CVI_S32 s32Ret;

VPSS_GRP_ATTR_S stVpssGrpAttr ;

memset(&stVpssGrpAttr,0,sizeof(VPSS_GRP_ATTR_S));

VPSS_CHN_ATTR_S astVpssChnAttr[VPSS_MAX_PHY_CHN_NUM] ;

VPSS_GRP_DEFAULT_HELPER2(&stVpssGrpAttr, srcSize.u32Width, srcSize.u32Height, VDEC_PIXEL_FORMAT, 1);

VPSS_CHN_DEFAULT_HELPER(&astVpssChnAttr[0], srcSize.u32Width, srcSize.u32Height, PIXEL_FORMAT_NV21, true);

VPSS_CHN_DEFAULT_HELPER(&astVpssChnAttr[1], dstSize.u32Width, dstSize.u32Height, PIXEL_FORMAT_RGB_888, true);

CVI_VPSS_DestroyGrp(VpssGrp);

s32Ret = SAMPLE_COMM_VPSS_Init(VpssGrp, abChnEnable, &stVpssGrpAttr, astVpssChnAttr);

if (s32Ret != CVI_SUCCESS) {

printf("init vpss group failed. s32Ret: 0x%x !\n", s32Ret);

return CVI_FAILURE;

// goto vpss_start_error;

}

s32Ret = SAMPLE_COMM_VPSS_Start(VpssGrp, abChnEnable, &stVpssGrpAttr, astVpssChnAttr);

if (s32Ret != CVI_SUCCESS) {

printf("start vpss group failed. s32Ret: 0x%x !\n", s32Ret);

return CVI_FAILURE;

// goto vpss_start_error;

}

MMF_CHN_S stSrcChn = {

.enModId = CVI_ID_VDEC,

.s32DevId = 0,

.s32ChnId = VDEC_CHN0

};

MMF_CHN_S stDestChn = {

.enModId = CVI_ID_VPSS,

.s32DevId = VpssGrp,

.s32ChnId = 0

};

s32Ret = CVI_SYS_Bind(&stSrcChn, &stDestChn);

if (s32Ret != CVI_SUCCESS) {

printf("vpss group bind failed. s32Ret: 0x%x !\n", s32Ret);

return CVI_FAILURE;

}else{

printf("vpss group bind success!\n");

}

// s32Ret = CVI_SYS_GetBindbyDest(&stDestChn,&stSrcChn);

// if (s32Ret == CVI_SUCCESS) {

// printf("SYS BIND INFO:%d %d %d",stSrcChn.enModId,stSrcChn.s32DevId,stSrcChn.s32ChnId);

// }

return CVI_SUCCESS;

}

6. Attach the VPSS channels to the VB pools

Attach each channel of the VPSS group to one of the VB pools initialized earlier.

CVI_U32 iVBPoolIndex = 0;

printf("Attach VBPool(%u) to VPSS Grp(%u) Chn(%u)\n", iVBPoolIndex, VPSS_GRP0, VPSS_CHN0);

s32Ret = CVI_VPSS_AttachVbPool(VPSS_GRP0, VPSS_CHN0, (VB_POOL)iVBPoolIndex);

if (s32Ret != CVI_SUCCESS) {

printf("Cannot attach VBPool(%u) to VPSS Grp(%u) Chn(%u): ret=%x\n", iVBPoolIndex, VPSS_GRP0, VPSS_CHN0, s32Ret);

goto vpss_start_error;

}

iVBPoolIndex++;

printf("Attach VBPool(%u) to VPSS Grp(%u) Chn(%u)\n", iVBPoolIndex, VPSS_GRP0, VPSS_CHN1);

s32Ret = CVI_VPSS_AttachVbPool(VPSS_GRP0, VPSS_CHN1, (VB_POOL)iVBPoolIndex);

if (s32Ret != CVI_SUCCESS) {

printf("Cannot attach VBPool(%u) to VPSS Grp(%u) Chn(%u): ret=%x\n", iVBPoolIndex, VPSS_GRP0, VPSS_CHN1, s32Ret);

goto vpss_start_error;

}

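7. Start the threads

The main one is the thread that sends the bitstream. bReleaseSendFrame indicates whether the other threads have finished, after which this thread releases its resources.

The sending thread always finishes earlier than the others, so it must wait for them to exit before releasing resources; otherwise the program segfaults.

I was stuck here for a long time before it finally clicked XD.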
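The shutdown ordering described above can be sketched in isolation with two threads and two flags. This is a simplified model, not the demo's code: stop_ctl and release_sender are hypothetical stand-ins for bStopCtl and bReleaseSendFrame, the "resource" is a single heap integer, and C11 atomics are used for the flags.

```c
#include <assert.h>
#include <pthread.h>
#include <stdatomic.h>
#include <stdbool.h>
#include <stdlib.h>
#include <unistd.h>

static atomic_bool stop_ctl;        /* set by the sender when its input is exhausted */
static atomic_bool release_sender;  /* set by main once the other threads have exited */
static atomic_int frames_consumed;
static int *shared_buf;             /* resource owned by the sender thread */

static void *sender(void *arg)
{
    (void)arg;
    shared_buf = malloc(sizeof *shared_buf);
    assert(shared_buf != NULL);
    *shared_buf = 42;
    atomic_store(&stop_ctl, true);          /* ask the other threads to stop */
    while (!atomic_load(&release_sender))   /* keep the resource alive until they have */
        usleep(1000);
    free(shared_buf);
    shared_buf = NULL;
    return NULL;
}

static void *consumer(void *arg)
{
    (void)arg;
    while (!atomic_load(&stop_ctl))
        usleep(1000);
    if (*shared_buf == 42)                  /* still valid: the sender has not freed it */
        atomic_fetch_add(&frames_consumed, 1);
    return NULL;
}

/* Start both threads, join the consumer first, then let the sender clean up. */
static int run_handshake(void)
{
    pthread_t ts, tc;
    atomic_store(&stop_ctl, false);
    atomic_store(&release_sender, false);
    atomic_store(&frames_consumed, 0);
    if (pthread_create(&ts, NULL, sender, NULL) != 0)
        return -1;
    int have_consumer = (pthread_create(&tc, NULL, consumer, NULL) == 0);
    if (have_consumer)
        pthread_join(tc, NULL);
    atomic_store(&release_sender, true);
    pthread_join(ts, NULL);
    return have_consumer ? atomic_load(&frames_consumed) : -1;
}
```

If the sender freed shared_buf right after setting stop_ctl, the consumer's last read would touch freed memory, which is the same kind of failure as the segfault mentioned above.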

The ffmpeg code is based on ./ffmpeg/tests/api/api-h264-test.c. _sendStream2Dec fills a VDEC_STREAM_S structure with the data of one frame and sends it to VDEC.

static void *sendFrame(CVI_VOID *pArgs){

// CVI_S32 s32Ret;

int _sendStream2Dec(AVPacket *pkt,CVI_BOOL bEndOfStream){

static CVI_S32 s32Ret;

static VDEC_STREAM_S stStream;

stStream.u32Len = pkt->size ;

stStream.pu8Addr = pkt->data ;

stStream.u64PTS = pkt->pts ;

stStream.bDisplay = true ;

stStream.bEndOfFrame = true ;

stStream.bEndOfStream = bEndOfStream ;

if(bEndOfStream){

stStream.u32Len = 0 ;

}

SendAgain:

s32Ret = CVI_VDEC_SendStream(VDEC_CHN0,&stStream,2000);

//VDEC may be busy; keep retrying until the frame has been sent

if(s32Ret != CVI_SUCCESS){

usleep(1000);//1ms

goto SendAgain;

}

return 1;

}

VDEC_THREAD_PARAM_S *pstVdecThreadParam = (VDEC_THREAD_PARAM_S *)pArgs;

const AVCodec *codec = NULL;

AVCodecContext *ctx= NULL;

AVCodecParameters *origin_par = NULL;

// struct SwsContext * my_SwsContext;

// uint8_t *byte_buffer = NULL;

AVPacket *pkt;

AVFormatContext *fmt_ctx = NULL;

int video_stream;

int byte_buffer_size;

int i = 0;

int result;

static int cnt = 0;

result = avformat_open_input(&fmt_ctx, pstVdecThreadParam->cFileName, NULL, NULL);

if (result < 0) {

av_log(NULL, AV_LOG_ERROR, "Can't open file\n");

pthread_exit(NULL);

}

result = avformat_find_stream_info(fmt_ctx, NULL);

if (result < 0) {

av_log(NULL, AV_LOG_ERROR, "Can't get stream info\n");

pthread_exit(NULL);

}

video_stream = av_find_best_stream(fmt_ctx, AVMEDIA_TYPE_VIDEO, -1, -1, NULL, 0);

if (video_stream < 0) {

av_log(NULL, AV_LOG_ERROR, "Can't find video stream in input file\n");

pthread_exit(NULL);

}

origin_par = fmt_ctx->streams[video_stream]->codecpar;

codec = avcodec_find_decoder(origin_par->codec_id);

if (!codec) {

av_log(NULL, AV_LOG_ERROR, "Can't find decoder\n");

pthread_exit(NULL);

}

ctx = avcodec_alloc_context3(codec);

if (!ctx) {

av_log(NULL, AV_LOG_ERROR, "Can't allocate decoder context\n");

pthread_exit(NULL);

}

result = avcodec_parameters_to_context(ctx, origin_par);

if (result) {

av_log(NULL, AV_LOG_ERROR, "Can't copy decoder context\n");

pthread_exit(NULL);

}

result = avcodec_open2(ctx, codec, NULL);

if (result < 0) {

av_log(ctx, AV_LOG_ERROR, "Can't open decoder\n");

pthread_exit(NULL);

}

pkt = av_packet_alloc();

if (!pkt) {

av_log(NULL, AV_LOG_ERROR, "Cannot allocate packet\n");

pthread_exit(NULL);

}

printf("pix_fmt:%d\n",ctx->pix_fmt);

// byte_buffer_size = av_image_get_buffer_size(ctx->pix_fmt, ctx->width, ctx->height, 16);

byte_buffer_size = av_image_get_buffer_size( AV_PIX_FMT_RGB565LE, 480, 320, 16);

// byte_buffer = (uint8_t*)fbp;

// byte_buffer = av_malloc(byte_buffer_size);

printf("w:%d h:%d byte_buffer_size:%d\n",ctx->width,ctx->height,byte_buffer_size);

// if (!byte_buffer) {

// av_log(NULL, AV_LOG_ERROR, "Can't allocate buffer\n");

// pthread_exit(NULL);

// }

printf("#tb %d: %d/%d\n", video_stream, fmt_ctx->streams[video_stream]->time_base.num, fmt_ctx->streams[video_stream]->time_base.den);

i = 0;

result = 0;

// int i_clock = 0;

clock_t clock_arr[10];

clock_t time, time_tmp;

time = clock();

while (result >= 0 && !bStopCtl) {

clock_arr[0] = clock();

result = av_read_frame(fmt_ctx, pkt);

if (result >= 0 && pkt->stream_index != video_stream) {

av_packet_unref(pkt);

continue;

}

clock_arr[1] = clock();

time_tmp = clock();

while(time_tmp - time <= 20000){//throttle the send rate

time_tmp = clock();

usleep(100);

}

printf("time:%f cnt:%d\n",(double)(time_tmp - time)/CLOCKS_PER_SEC,cnt);

time = time_tmp;

if (result < 0){

// result = _sendStream2Dec(pkt,true);

goto finish;

}

else {

if (pkt->pts == AV_NOPTS_VALUE)

pkt->pts = pkt->dts = i;

result = _sendStream2Dec(pkt,false);

cnt++;

}

av_packet_unref(pkt);

if (result < 0) {

av_log(NULL, AV_LOG_ERROR, "Error submitting a packet for decoding\n");

goto finish;

}

clock_arr[2] = clock();

clock_arr[3] = clock();

if(0)

printf("time=%f,%f,%f\n", (double)(clock_arr[1] - clock_arr[0]) / CLOCKS_PER_SEC,

(double)(clock_arr[2] - clock_arr[1]) / CLOCKS_PER_SEC,

(double)(clock_arr[3] - clock_arr[2]) / CLOCKS_PER_SEC);

i++;

}

finish:

bStopCtl = true;

while(!bReleaseSendFrame){

usleep(100000);

};

printf("Exit send stream img thread\n");

av_packet_free(&pkt);

avformat_close_input(&fmt_ctx);

avcodec_free_context(&ctx);

// av_freep(&byte_buffer);

// sws_freeContext(my_SwsContext);

pthread_exit(NULL);

}

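The rest is fairly simple, so I won't go into more detail.

IV. Full code

The code is fairly long and exceeded the post length limit, so please read it on GitHub.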

cvitek-tdl-sdk-sg200x/sample/cvi_tdl/sample_my_dec.c at main · PrettyMisaka/cvitek-tdl-sdk-sg200x (github.com)

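V. Closing

Hardware H.264 decoding is dozens of times faster than software decoding with ffmpeg, if not more (no surprise there), but the SPI interface still severely limits the refresh rate, so I plan to try again later with an RGB-interface screen. Figuring out VDEC also took a while, but I'm happy to have ended up with this small demo. Feedback is welcome :D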